Time flies when you are having fun, and sometimes it moves so fast that events overtake you whilst you are still scribbling your two cents. Not too long ago I wrote about the challenges of regulating AI and why something must be done. The general thought was (and still is) that balancing innovation and regulation presents a unique challenge in most cases: intervene prematurely, and you risk stifling nascent potential before it has had a chance to flourish. Conversely, delayed or minimal action can result in significant repercussions. The swift ascent of AI into the mainstream spotlight has injected a pressing immediacy into debates surrounding its regulation, and after delving into the ramifications of allowing AI to evolve without checks, I concluded that something should be done. Naturally, lawmakers and regulators haven’t been idle, and that is what this article is about. That, and an aspect that has received only a fraction of the attention but could significantly shape the future of Artificial Intelligence.

Tightening the screws

It is somewhat ironic that as I put the finishing touches on this article, tech bosses are getting grilled by members of the US Senate. The dawn of the AI era has not only revolutionized industries; it has also caught the attention of regulators worldwide, leading to a surge in legislative initiatives and regulatory actions aimed at overseeing the development and application of artificial intelligence technologies. In the United States, the Federal Trade Commission (FTC) has taken a proactive stance by ordering major tech companies like Alphabet, Anthropic, Amazon, Microsoft, and OpenAI to submit detailed information about their AI partnerships. This action is a clear indicator of the FTC’s commitment to understanding and potentially regulating the field of AI, reflecting a broader pattern of regulatory bodies attempting to keep pace with the rapid advancements in AI technology.

In addition to the FTC’s move, other U.S. agencies have also begun to tread into the realm of AI regulation. The Securities and Exchange Commission (SEC) is exploring the implications of AI in the financial sector, focusing on AI-driven trading algorithms that could pose risks to market stability. The Food and Drug Administration (FDA) is scrutinizing AI applications in healthcare, particularly those related to diagnostic tools and personalized medicine, ensuring they meet stringent safety and efficacy standards.

Across the Atlantic, the European Commission has been equally vigilant. The Commission’s recent investigation into Microsoft’s investment in OpenAI signifies a growing concern over the influence of large tech corporations in shaping AI’s future. Concurrently, the European Parliament has been debating the ethical implications of AI, leading to proposals for new regulations that would govern AI usage across the EU, focusing on privacy, data protection, and transparency.

In the United Kingdom, the government has initiated a comprehensive review of AI technologies, examining their impact on various sectors, including finance, healthcare, and transportation. The UK’s approach aims to balance innovation with public safety, ensuring that AI systems are developed and deployed responsibly.

China, a significant player in the AI arena, has also begun to implement regulations. The Chinese government’s focus has been on ensuring that AI development aligns with national interests, with specific guidelines aimed at preventing security risks and promoting ethical standards in AI applications. This includes regulations on data privacy, AI-driven content moderation, and the use of AI in surveillance and social scoring systems.

Similarly, countries like Canada, Japan, and Australia have launched national AI strategies that include regulatory frameworks. Canada’s focus on ethical AI, Japan’s emphasis on AI in economic revitalization, and Australia’s consideration of AI’s impact on the workforce and privacy issues are testament to the diverse approaches being taken globally.

In India, the government has initiated discussions around a national AI policy, focusing on leveraging AI for economic growth while addressing issues like data privacy, security, and ethical AI development.

Moreover, international organizations like the OECD and the United Nations have been instrumental in setting global standards and principles for AI. These include guidelines on AI ethics, transparency, and ensuring AI’s benefits are distributed equitably across societies.

This global patchwork of regulatory initiatives and legislative actions reflects a common acknowledgment of AI’s transformative potential and the need to manage its risks. As AI continues to evolve and permeate various facets of society, these regulatory efforts will play a crucial role in shaping its trajectory, ensuring it serves the greater good while mitigating adverse impacts. The FTC’s recent actions, alongside those of other national and international bodies, underscore a pivotal moment in the governance of AI, marking a transition from laissez-faire development to a more structured and responsible approach.

The European Question

I believe it is fair to summarize at this point that the rapid advancement of artificial intelligence (AI) technologies has led to increased scrutiny from regulators globally. So far, so good. However, a more complex development is unfolding in the European Union, particularly in Germany, that has been flying somewhat under the radar. German weekly “Die Zeit” reported that the EU has been diligently working on an AI regulation, known as the AI Act, but now faces significant challenges in securing approval from member states, including Germany. The AI Act aims to establish rules for high-risk AI applications, such as those impacting employment decisions or social benefits. The legislation has brought together a surprising alliance of researchers, entrepreneurs, and civil society organizations, who are urging the German government to endorse the Act despite some reservations.

The AI Act, which was fiercely debated in the EU, raises questions about the appropriate level of regulation for AI systems like ChatGPT and automated surveillance technologies. While the Act does not entirely ban biometric surveillance, it imposes strict limitations, allowing exceptions for purposes such as combating terrorism or finding victims of human trafficking. The Act also includes provisions for ‘foundation models,’ like large language models, requiring transparency about their functioning and imposing additional obligations for more powerful systems. The stance of the German government, particularly the Federal Ministry for Digital and Transport, is pivotal in this scenario. The Ministry has expressed concerns about stifling innovation and the bureaucratic burden on small and medium-sized enterprises. Daniel Abbou, the head of the AI Federal Association in Germany, emphasizes the need for legal certainty for businesses and suggests that delaying the Act could be detrimental to companies waiting to align their AI projects with the new regulations.

This situation raises a crucial question: Is the AI Act’s approach to regulating AI too restrictive, potentially hindering innovation? The Act’s critics, including some in the German government, argue that a failure of the legislation would be less dramatic than it sounds, because it would leave room for a more innovation-friendly approach in the future.

On the other hand, proponents of the AI Act, such as Carla Hustedt from the Mercator Foundation and Sergey Lagodinsky, a member of the European Parliament, argue that the Act represents a necessary step in establishing minimum standards for AI applications. They contend that failing to pass the current proposal could lead to a weaker law in the future, especially given the political shifts in Europe. The debate over the AI Act highlights the delicate balance between regulating AI to ensure safety and ethical standards and fostering an environment conducive to innovation. As the EU, the UK, and the US continue to navigate this complex landscape, the outcomes of these regulatory efforts will significantly impact the future development and application of AI technologies globally. The AI Act, if passed, could serve as a model for other countries, demonstrating how to regulate AI while still encouraging technological advancement.

Where next for AI regulation?

One might think now that this is just another prime example of the complex interplay between EU legislation and the member states, but we should not stop at the borders of the Old World. Looking at the brave new world of AI regulation worldwide, one can’t help but raise an eyebrow at the grand tapestry of global efforts: a mishmash of ambition, caution, and the occasional bureaucratic tango. It’s like watching an intricate dance, where every step towards regulation is a step into largely uncharted territory. The urgency for regulating AI is apparent, but finding a solution couldn’t be further from being a done deal.

Given the nature of the beast, global collaboration and consistency in regulation seem indicated, but is that more than an admirable goal? As this and other examples of regulating innovation have taught us, getting the world to sing in harmony is akin to herding cats: a commendable endeavor, but one fraught with discordant meows. Each player on the global stage brings their unique tune, making this orchestration less of a symphony and more of a curious experimental jazz piece.

As for the future challenges and the rapid evolution of AI, we’re in a race where the finish line keeps moving. Regulators are lacing up their running shoes, only to find that AI is already a few laps ahead, breaking records in ways we haven’t even figured out how to measure yet. In a way, it is just another classic example of a flawed attempt to control a disruptive technology.

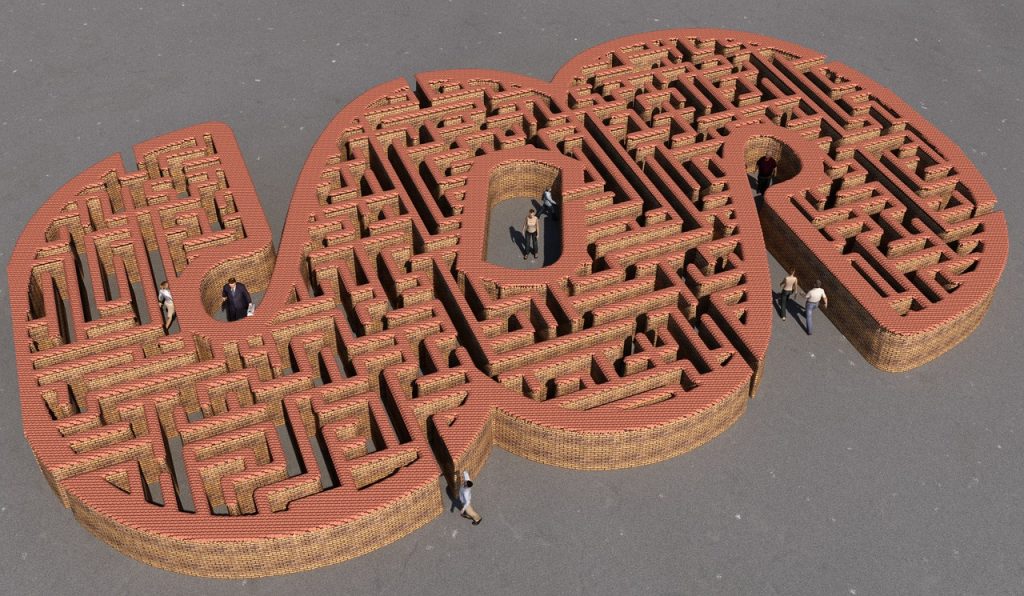

Maybe in the end, this journey of AI regulation is indeed less about drafting the perfect rules and more about navigating a labyrinth with a slightly faulty compass. We’re charting a course through unexplored terrain, armed with good intentions and a hope that our regulatory map is reading true north. As we step forward, let’s do so with a blend of optimism and a healthy dose of skepticism, ready to embrace the marvels of AI while keeping an eye out for any digital dragons lurking around the corner. After all, in the world of AI, expect the unexpected – and maybe keep a virtual shield handy, just in case.

——–

Disclaimer: Regarding the picture above the title, the paragraph as a maze is a piece of genius I found on Pixabay; all credit goes to the author/artist. Also, as always, I’m trying to be completely transparent about affiliations, conflicts of interest, my expressed views and liability: Like anywhere else on this website, the views and opinions expressed are solely those of the authors and other contributors. The material information contained on this website is for general information purposes only. I endeavor to keep this information correct and up-to-date, but I do not accept any liability for any false, inaccurate or incomplete information, or for damages arising from technical issues or from clicking on or relying on third-party links. I am not responsible for outside links and the information they contain, nor does this article contain any referrals or affiliations with any of the producers or companies mentioned. As I said, the opinions are my own, no liability, just thought it would be important to make this clear. Thanks!